Data annotation is having a moment. Between LLM training data and AI governance requirements, everyone’s suddenly talking about documenting their data. But we’ve been implementing systematic annotation for 6 years across 50+ high-growth companies, and the real value isn’t where most people think it is.

It’s actually really simple. Simply document what your existing data means. And treat that documentation layer as part of your core infrastructure.

Everyone’s Talking About Annotation (But Missing the Point)

The current conversation centres on narrow use cases. Teams scramble to label training data for machine learning. Compliance departments document lineage for regulators. AI governance frameworks demand transparency. All important, but this misses the broader operational reality we’ve observed.

Data annotation, properly understood, means embedding business context directly into your data infrastructure. Not just noting that a column contains customer lifetime value, but documenting how it’s calculated, why certain transactions are excluded, which board metrics depend on it, and what should trigger alerts.

O’Reilly’s research across 1,900 practitioners confirms our experience: only 20 per cent of organisations publish data lineage. That means that your analytics team will spent a huge amount of time doing archeology instead of analysis.

The gap creates specific dysfunction. A metric drops 20 per cent overnight. Three analysts investigate for hours. And then find that someone implemented that partner account exclusion finance requested last quarter, but nobody documented the change. The metric was correct; the context was missing.

Event governance exemplifies this gap. Most platforms track what happened but not why it matters. A subscription upgrade event might trigger dozens of downstream calculations. Without business context in the event schema, teams can’t distinguish between a pricing experiment’s success and a bulk migration’s distortion.

At Tasman, we document events with commercial relevance: what triggered the action, expected patterns, revenue impact, and dependencies. When event streams show unusual patterns, documentation immediately clarifies whether it’s celebration or concern. That’s the difference between technical logs and business intelligence infrastructure.

Raw Data to Board Report: The Annotation Layer Cake

Systematic annotation requires thinking in layers, each building on the previous. The temptation is starting with executive dashboards—the visible pain point. But sustainable documentation begins at the foundation.

Source and ingestion documentation forms the bedrock. Every data source has quirks affecting downstream calculations. Stripe webhooks lag during peak processing. Salesforce custom fields contain historical logic from previous migrations. Without documenting these realities, troubleshooting becomes guesswork.

Event governance represents the next critical layer. Modern businesses generate thousands of event types. But raw events without business context create noise, not signal. Document each event’s business meaning, triggers, expected patterns, and downstream impacts. When subscription_modified appears, documentation should clarify which modifications affect revenue recognition versus administrative changes. Look for tools like Avo to really speed up his process.

The transformation layer—where business logic lives—demands the most rigorous documentation. This is where raw data becomes metrics. Consider monthly recurring revenue: seems straightforward until you hit edge cases. Include paused accounts? Handle mid-month upgrades? Credits? Partner accounts? Each decision shapes the metric’s meaning.

The presentation layer requires its own annotation strategy. Business users need to understand not just what metrics show, but limitations, update frequency, and appropriate use cases. A customer acquisition cost optimised for marketing might be inappropriate for board reporting. Without clear documentation, misuse is inevitable.

Making Annotation Systematic, Not Heroic

Documentation heroes don’t scale. Every organisation has that one person who meticulously documents everything. When they leave, quality collapses. Individual excellence can’t substitute for systematic practice.

Creating sustainable annotation requires embedding documentation into development workflows. Pull requests need documentation. Sprint planning includes documentation points. Technical debt includes documentation debt.

Here’s what you could do instead:

- Week 1: Document your ten most critical metrics. Add business context to executive dashboards. Create event taxonomy for core user actions.

- Week 2-4: Document source system quirks. Add business logic to transformations. Build comprehensive event schemas.

- Week 5-8: Automate lineage tracking, quality monitoring, and documentation validation in CI/CD.

Cultural embedding makes it sustainable. Onboarding includes annotation training. Code reviews evaluate documentation quality. Teams track documentation debt like technical debt.

Modern stacks already support this. Snowflake provides native COMMENT functionality. dbt generates documentation from code. Looker supports rich field descriptions. The EDM Council’s CDMC framework identifies 37 capabilities—annotation touches 15 directly.

AI-assisted tools promise 70 per cent time savings, but can’t replace human understanding of business context. Use AI for structure, humans for meaning.

The key is making documentation the path of least resistance. Templates accelerate creation. Validation ensures completeness. Integration with workflows prevents separate tasks. When documenting is easier than not, sustainable practices emerge.

A 30-Day Annotation Sprint

Start with an honest audit. Query your information schema. Count undocumented objects. Identify your most questioned metrics. This baseline quantifies your documentation debt.

Week 1: Audit and Quick Wins

Find undocumented critical paths. Most organisations discover 80 per cent lacks meaningful documentation. Start with the metric everyone questions at board meetings. Document that complex calculation only one person understands.

Week 2: Business Context Injection

Interview stakeholders about what metrics mean, not what they calculate. Document exclusions, edge cases, gotchas. You’ll discover the CMO and CFO define customer acquisition cost differently. Document these discrepancies for resolution.

Week 3: Systematic Implementation

Add comprehensive comments to dbt models. Implement Snowflake COMMENTs. Create a business-friendly data dictionary. Establish quality thresholds triggering alerts.

Week 4: Measure and Iterate

Track time on data discovery before/after. Survey stakeholder confidence. Count Slack questions. Calculate prevented rework hours.

Forcing Functions:

- Make documentation part of “done” definition

- Block deployments without annotation

- Include in sprint planning

- Monthly documentation debt reviews

The Boring Revolution

Data annotation isn’t sexy. It won’t get you conference keynotes about AI innovation. But across our 50+ implementations, it’s the single highest-ROI practice we’ve seen.

The tools exist—every modern platform supports annotation. And the frameworks exist—industry bodies have documented best practices. And the evidence exists as well – e.g. a regional bank achieving 750% ROI with annotation.

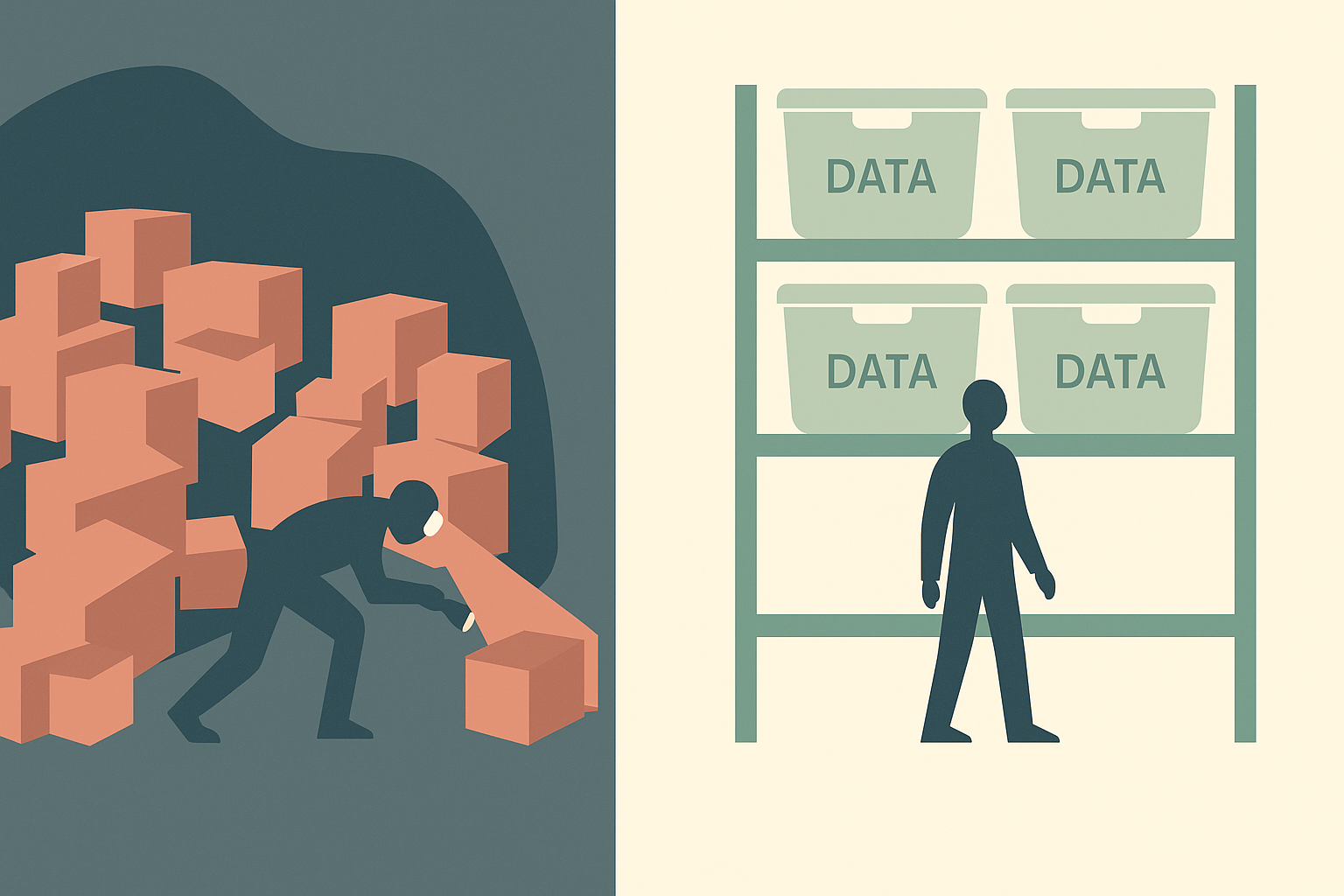

Good data housekeeping through systematic annotation transforms expensive infrastructure into competitive advantage. It’s the difference between data graveyards and decision platforms. Between Excel shadow IT and trusted self-service. Between archaeological expeditions and actual insights.

The only question is whether you’ll implement systematic annotation now, or after another six months of untrusted dashboards and wasted analyst time.

Ready to transform your data platform into a decision platform? Let’s start with your first annotation sprint.

Frequently Asked Questions about Data Annotation

What’s the difference between data annotation and traditional documentation?

Traditional documentation typically lives in wikis or confluence pages—separate from your data infrastructure and quickly outdated. Data annotation embeds business context directly into your data platform using native features like Snowflake COMMENTs and dbt documentation. This means documentation lives with the code, updates through CI/CD pipelines, and appears wherever users encounter the data. The regional bank achieving 750% ROI didn’t create more documents—they embedded meaning into their infrastructure.

How long does it take to implement systematic data annotation?

Our 30-day sprint framework delivers measurable results within four weeks. Week one focuses on documenting your ten most critical metrics. By week four, you’re tracking prevented rework hours and reduced discovery time. Full implementation across a typical data estate (500-1,000 objects) takes 3-6 months, but ROI appears immediately. Teams typically see 40% reduction in “what does this mean?” questions within the first sprint.

Which tools support data annotation, and do we need new software?

Your existing stack likely supports annotation already. Snowflake provides COMMENT functionality on every object. BigQuery offers schema descriptions. dbt generates documentation from YAML files. Looker, Tableau, and PowerBI all support field-level descriptions. Specialised tools like Avo accelerate event governance, but aren’t required. The investment isn’t in tools—it’s in systematic practice.

What’s the actual ROI of data annotation for scale-ups?

Beyond the 750% ROI case study, we consistently see 15-20 hours weekly saved on data discovery across our implementations. For a team of five analysts at £70,000 annually, that’s £84,000 in prevented archaeology. Add reduced rework from misunderstood metrics, faster onboarding, and increased stakeholder trust, and annotation typically pays for itself within eight weeks. The question isn’t whether to annotate, but how much you’re currently wasting without it.